
Vehicular AI Agent Development


Developing and Testing Vehicular AI Agents

Team: William Ching, Romany Ebrhem, Aditya Kaushik Jonnavittula, Vibodh Singh, Haejin Song, Eddie Ward, Donald Yubeaton

Project Advisor: Professor Jorge Ortiz; Project Mentor: Navid Salami Pargoo

Project Overview:

This project will design and implement a realistic intersection simulation environment and analyze traffic data to improve its accuracy. Students will also train an in-vehicle AI agent to interact with drivers and test its performance in different situations. Using a VR headset and a remote-control car with a first-person-view camera, students will gain valuable insight into the capabilities and limitations of advanced AI agents.

Project Journey:

Hello 👋 and welcome to the page for our research project at WINLAB, Summer 2023! We are a team of motivated students looking to make a meaningful impact and deepen our knowledge. We hold weekly team meetings on Mondays at 12:00 p.m. ET and work with the Testing Vehicular AI Agent research team. We also give weekly presentations on Thursdays at 2:00 p.m. ET to showcase our project milestones and achievements. Use the table of contents below to navigate the page and review our work throughout the internship program.

Table of Contents

-- Week 1 Contents --

-- Week 2 Contents --

-- Week 3 Contents --

-- Week 4 Contents --

-- Week 5 & 6 Contents --

-- Week 7 Contents --

-- Week 8 & 9 Contents --

-- Week 10 Contents --

-- Acknowledgement and References --

Week 1

Goals

- Team introductions

- Kickoff meetings

- Refining research questions

Summary

During the first week, we engaged in various activities to lay the foundation for our research project. We held introductory meetings to foster collaboration and establish a common goal, where we introduced ourselves, shared our backgrounds, and discussed our areas of expertise. We also reviewed and scoped the research goals and objectives, identified the research questions, and determined the specific outcomes we aim to achieve.

In addition, we surveyed the existing literature and conducted initial research to gather information and insights relevant to our project. We reviewed previous studies, scholarly articles, and other resources to understand the current state of knowledge in our research area.

Overall, the first week involved team introductions, goal clarification, literature review, scheduling, and establishing research protocols to lay the groundwork for a productive and successful research project.

Next Steps

- Read the CARLA (Car Learning to Act) documentation

- Learn how to set up CARLA to simulate real-world traffic scenarios

- Become familiar with the Orbit Lab machines

Resources

- [Week 1 Slides Here]

- [Poster Abstract: Multi-sensor Fusion for In-cabin Vehicular Sensing Applications]

- [Learning When Agents Can Talk to Drivers Using the INAGT Dataset and Multisensor Fusion]

- [Toward an Adaptive Situational Awareness Support System for Urban Driving]

Week 2

Goals

- Learn about the CARLA simulator

- Set up the CARLA simulator on Orbit Lab nodes / machines

- Learn about the sensors in the CARLA simulator

Summary

During the second week, we began refining the scope of the research project. We also attended daily workshops on various technologies, such as Linux, Python, and ROS. These workshops were highly relevant to our project, especially the material on the Python API we will use to interact with the CARLA simulation. Through the Introduction to Linux workshops, we learned about the Orbit Lab and explored its use cases. We hope to leverage the computing power of its nodes to run the CARLA simulator and to train and/or fine-tune models.

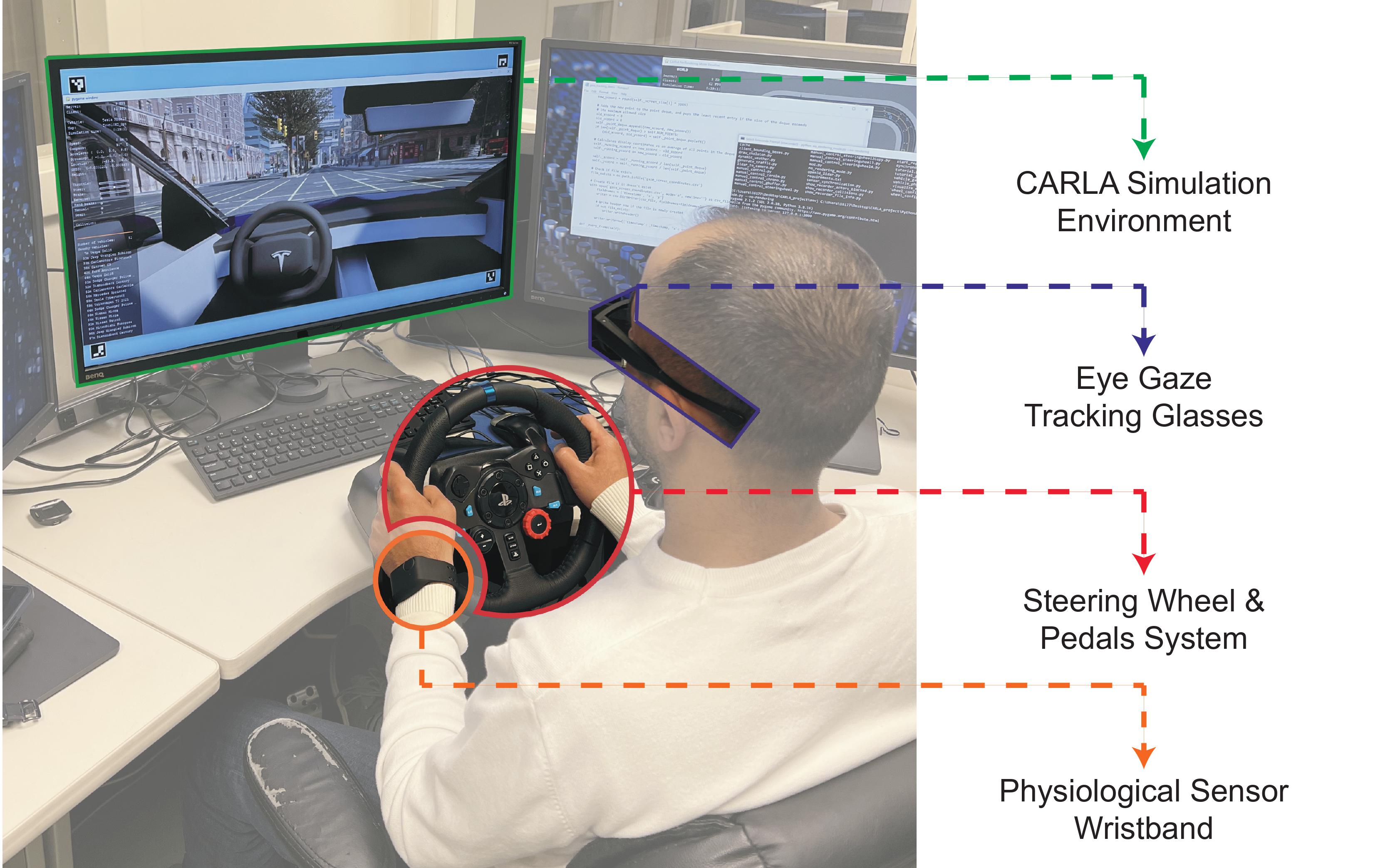

We first tried to set up the CARLA simulator on our personal laptops, which came with challenges. Not all of our machines had the hardware or compute power to run CARLA, and its dependencies are strict about versions, which not all of us had installed (e.g., there is currently no support for Python 3.10 or later). After a team huddle, we gained access to the main computer at WINLAB, which has a simulated driving rig, and ran the CARLA simulator there. Once it was running, we explored CARLA's many sensors and experimented with data collection and data visualization.
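Because the CARLA Python client is strict about interpreter versions, a quick preflight check before installing can save time. The helper below is a hypothetical sketch, not part of CARLA: its upper bound simply mirrors the Python 3.10 limit noted above, and the real supported range depends on the CARLA release, so the constant should be adjusted to match the release notes for the version in use.

```python
import sys

# Assumed upper bound, mirroring the "no support for Python 3.10 or later" note.
# This depends on the CARLA release; adjust it to match the release in use.
MAX_EXCLUSIVE = (3, 10)


def python_supported_for_carla(version_info=None, max_exclusive=MAX_EXCLUSIVE):
    """Return True if the (major, minor) version is below the assumed upper bound."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) < tuple(max_exclusive)


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    if python_supported_for_carla():
        print(f"Python {major}.{minor} is within the assumed supported range.")
    else:
        print(f"Python {major}.{minor} is too new; create an older Python environment.")
```

Running it inside each teammate's environment (or a fresh virtual environment) gives a fast yes/no before attempting the CARLA install.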

Once we became familiar with the CARLA architecture, we began to brainstorm our data collection process. We planned to store sensor data in a PostgreSQL database exposed through a REST API.

The first image shows the CARLA logo (Source: CARLA page). The second image shows the rig / setup of the main computer used to run the CARLA simulator (Source: [Poster Abstract: Multi-sensor Fusion for In-cabin Vehicular Sensing Applications]).

Next Steps

- Create the database and schemas / models for the data we plan to collect

- Create the REST API

Resources

Week 3

Goals

- Set up the REST API and database

- Fix data visualization for the various CARLA camera sensors

Summary

This week, we discussed how to store the data collected from CARLA sensors. We decided to use Django with django-rest-framework to build the REST API and connect it to our PostgreSQL database. We implemented the RGB image model/schema and successfully tested the Create, Read, Update, and Delete (CRUD) operations on it. In other words, we can now store and retrieve RGB images from the database through the REST API.
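The CRUD cycle on the RGB image model can be illustrated without the full web stack. The sketch below swaps in Python's built-in `sqlite3` module for the PostgreSQL database and the Django / django-rest-framework layer we actually used, so it runs anywhere. The table name `rgb_image` and its columns are hypothetical and not our real schema.

```python
import sqlite3

# Hypothetical table standing in for the Django RGB image model:
# frame = simulator frame number, data = raw pixel bytes.
SCHEMA = """
CREATE TABLE IF NOT EXISTS rgb_image (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    frame INTEGER NOT NULL,
    width INTEGER NOT NULL,
    height INTEGER NOT NULL,
    data BLOB NOT NULL
)
"""


def create_image(conn, frame, width, height, data):
    """Create: insert one image and return its new id."""
    cur = conn.execute(
        "INSERT INTO rgb_image (frame, width, height, data) VALUES (?, ?, ?, ?)",
        (frame, width, height, data),
    )
    conn.commit()
    return cur.lastrowid


def read_image(conn, image_id):
    """Read: return (id, frame, width, height, data), or None if missing."""
    return conn.execute(
        "SELECT id, frame, width, height, data FROM rgb_image WHERE id = ?",
        (image_id,),
    ).fetchone()


def update_image(conn, image_id, data):
    """Update: replace the pixel bytes; True if a row was changed."""
    cur = conn.execute("UPDATE rgb_image SET data = ? WHERE id = ?", (data, image_id))
    conn.commit()
    return cur.rowcount == 1


def delete_image(conn, image_id):
    """Delete: remove the image; True if a row was removed."""
    cur = conn.execute("DELETE FROM rgb_image WHERE id = ?", (image_id,))
    conn.commit()
    return cur.rowcount == 1


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    image_id = create_image(conn, frame=1, width=2, height=1, data=bytes(6))
    print(read_image(conn, image_id)[:4])  # id, frame, width, height
    update_image(conn, image_id, bytes([255] * 6))
    delete_image(conn, image_id)
    print(read_image(conn, image_id))  # None after deletion
```

In the Django version, the same four operations map onto the REST verbs (POST, GET, PUT/PATCH, DELETE) handled by the framework's views rather than hand-written SQL.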

While experimenting with CARLA cameras, we ran into a bug in which the collected images were corrupted: instead of a clear picture, they appeared distorted with fast-moving horizontal stripes. Our team spent some time debugging the issue and reading through the code.

The first image shows the django-rest-framework logo (Source: [django-rest-framework main page]). The second image shows the image distortion bug we encountered when visualizing data from the RGB camera sensor in the CARLA simulator.

The image above shows a sample image that was successfully collected from the RGB camera sensor in the CARLA simulator.
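The page does not record the root cause of the striping bug, so the following is an assumption rather than our confirmed fix. A common source of garbled CARLA camera images is reading the raw buffer with the wrong layout: CARLA camera images arrive as a flat BGRA buffer with 4 bytes per pixel, so treating it as 3-byte RGB misaligns every row and produces repeating stripe or skew artifacts. The hypothetical pure-Python helper below validates the buffer size and converts BGRA to RGB with slice assignment.

```python
def bgra_to_rgb(raw, width, height):
    """Convert a flat BGRA byte buffer (4 bytes/pixel) into flat RGB bytes (3 bytes/pixel).

    Raises ValueError if the buffer length does not match width * height * 4,
    which is the kind of mismatch that misaligns rows when ignored.
    """
    n = width * height
    if len(raw) != n * 4:
        raise ValueError(f"expected {n * 4} bytes for {width}x{height} BGRA, got {len(raw)}")
    out = bytearray(n * 3)
    out[0::3] = raw[2::4]  # R is the third byte of each BGRA pixel
    out[1::3] = raw[1::4]  # G is the second byte
    out[2::3] = raw[0::4]  # B is the first byte; alpha (fourth byte) is dropped
    return bytes(out)
```

With NumPy available, the same conversion is typically written as `np.frombuffer(image.raw_data, dtype=np.uint8).reshape((image.height, image.width, 4))[:, :, :3][:, :, ::-1]`, where `image` is the `carla.Image` passed to the sensor callback.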

Next Steps

- Learn about Scenario Runner in CARLA

- Brainstorm some scenarios to set up in the CARLA simulator

- Begin analyzing the PazNet architecture

Resources

Week 4

Goals

- Set up the database on an Orbit Lab node

- Apply computer vision in the CARLA simulator

- Understand Scenario Runner and create a basic scenario

Summary

This week, we learned how to use the Scenario Runner dependency and implemented a basic scenario with it. Scenario Runner lets us create specific experiments focused on collecting the data we need, rather than relying on the free-roam mode of the CARLA simulator. After working through the documentation and example code, we created a simple route in which the ego vehicle must drive forward, detect an obstacle, and get past it successfully.
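The pass condition for this obstacle route can be expressed as a check over a logged run. The sketch below is hypothetical and does not use Scenario Runner's own criteria classes: it assumes the route is straight enough to track the ego vehicle by one longitudinal position per tick, that collision events are counted separately, and that ending 5 m past the obstacle counts as getting past it (an arbitrary illustrative margin). `RunLog` and `passed_obstacle` are illustrative names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RunLog:
    """Hypothetical per-run log for the obstacle route."""
    ego_positions_m: Sequence[float]  # ego position along the route, one value per tick
    collisions: int                   # collision events recorded during the run


def passed_obstacle(log: RunLog, obstacle_pos_m: float, clearance_m: float = 5.0) -> bool:
    """Succeed only if the run is collision-free and the ego ends
    at least `clearance_m` beyond the obstacle's position."""
    if not log.ego_positions_m:
        return False
    final_pos = log.ego_positions_m[-1]
    return log.collisions == 0 and final_pos >= obstacle_pos_m + clearance_m
```

Keeping the criterion as a pure function of the log makes it easy to re-score old runs if the margin or obstacle placement changes.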

We also worked on computer vision to detect important elements in CARLA, such as traffic lights, other vehicles, and other common obstacles.

Next Steps

- Learn about Scenario Runner in CARLA

- Brainstorm some scenarios to set up in the CARLA simulator

- Begin analyzing the PazNet architecture

Resources

Week 5 & 6

Goals

- Implement a GPS minimap in CARLA

- Create more complex scenarios

Resources

Week 7

Goals

- Connected the minimap with Scenario Runner

- Made the steering wheel work with Scenario Runner

- Worked on showing directions on the minimap
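One way to derive the directions shown on the minimap is to compare the vehicle's heading with the bearing to the next waypoint. The helper below is a hypothetical sketch, not our minimap code: it works in a flat right-handed 2D frame (x east, y north, heading measured counter-clockwise from +x in degrees), and the 20-degree "straight" tolerance is an arbitrary choice. CARLA's world frame follows Unreal Engine's left-handed convention, so the y coordinates and heading would likely need to be negated before feeding CARLA data into this helper.

```python
import math


def turn_instruction(pos, heading_deg, waypoint, straight_tol_deg=20.0):
    """Return 'left', 'right', or 'straight' for steering toward `waypoint`.

    pos, waypoint: (x, y) in a right-handed frame (x east, y north).
    heading_deg:   vehicle heading, counter-clockwise from +x.
    """
    dx = waypoint[0] - pos[0]
    dy = waypoint[1] - pos[1]
    bearing_deg = math.degrees(math.atan2(dy, dx))
    # Signed angle from heading to bearing, wrapped into [-180, 180).
    # Positive means the waypoint lies to the vehicle's left.
    delta = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(delta) <= straight_tol_deg:
        return "straight"
    return "left" if delta > 0 else "right"
```

Wrapping the angle difference is what keeps the instruction correct when the heading and bearing straddle the ±180° seam.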

Next Steps

- Add instructions to the scenarios

- Display route directions on the minimap

- Get all working features running on a much larger map

Resources

Week 8 & 9

Goals

- Displaying the waypoints in the GPS minimap.

- Collection of data based on the scenarios created in CARLA.

- Loading and testing the scenarios in Town-12.

Summary

During weeks 8 and 9, we implemented waypoints in the GPS minimap we had built in the previous weeks. This lets us set a path for the vehicle and track it in real time. We also loaded the Town-12 map in the CARLA simulator. Town-12 is a vast map spanning 10 km × 10 km (100 km²), compared with 1.2 km² for the standard map. It also contains a variety of sections, such as a downtown area, farmland, woods, city, suburbs, and highways, which makes it suitable for larger experiments.
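Drawing waypoints and the vehicle on a GPS-style minimap comes down to mapping world coordinates onto pixel coordinates. The sketch below is a hypothetical linear projection, not our implementation: it assumes the map's world bounds in meters are known, uses a single scale for both axes so the map is not distorted, places the map's maximum y at the top row of the image, and clamps points to the image edges. `MinimapProjection` is an illustrative name.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MinimapProjection:
    """Hypothetical world-to-minimap mapping (world units in meters)."""
    min_x: float
    max_x: float
    min_y: float
    max_y: float
    width_px: int
    height_px: int

    @property
    def scale(self) -> float:
        """Pixels per meter; one scale for both axes preserves the aspect ratio."""
        return min(self.width_px / (self.max_x - self.min_x),
                   self.height_px / (self.max_y - self.min_y))

    def to_pixel(self, x: float, y: float) -> Tuple[int, int]:
        """Map world (x, y) to (column, row); row 0 is the top edge (max_y)."""
        s = self.scale
        col = round((x - self.min_x) * s)
        row = round((self.max_y - y) * s)
        # Clamp so points on or beyond the far edges stay inside the image.
        col = min(max(col, 0), self.width_px - 1)
        row = min(max(row, 0), self.height_px - 1)
        return col, row
```

For a 10 km × 10 km map such as Town-12 drawn into a hypothetical 500 × 500 px minimap, the scale is 500 / 10,000 = 0.05 px/m, so each pixel covers 20 m; closely spaced waypoints may then need thinning, or the minimap may need to zoom around the vehicle.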

Next Steps

- Large-scale testing of scenarios.

- Collecting data by having different people drive the scenarios.

- Comparing data from unassisted and agent-assisted runs against the ideal scenario criteria.

Resources

Week 10

Summary

During the final week, we collected statistical and graphical data by having different subjects drive our scenarios. The collected data included braking, the number of collisions each subject had during the scenario, changes in steering angle, changes in speed, and lane invasions.
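Summarizing each run into a few numbers makes unassisted and agent-assisted runs easier to compare. The sketch below is hypothetical: it assumes each run is logged as per-tick samples with speed, steering, brake, and flags for collision and lane-invasion events (the field names are illustrative, not our logging format), and it uses total absolute steering and speed change as rough measures of how much the driver corrected.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    """One logged simulation tick (hypothetical format)."""
    speed_kmh: float
    steer: float         # normalized steering command in [-1.0, 1.0]
    brake: float         # normalized brake command in [0.0, 1.0]
    collision: bool      # True on ticks where a collision event was flagged
    lane_invasion: bool  # True on ticks where a lane-invasion event was flagged


def summarize_run(samples: List[Sample]) -> dict:
    """Reduce a run to per-run metrics; event counts assume one flag per event."""
    if not samples:
        raise ValueError("empty run")
    pairs = list(zip(samples, samples[1:]))  # consecutive tick pairs
    return {
        "collisions": sum(s.collision for s in samples),
        "lane_invasions": sum(s.lane_invasion for s in samples),
        "braking_ticks": sum(s.brake > 0.0 for s in samples),
        "mean_speed_kmh": sum(s.speed_kmh for s in samples) / len(samples),
        "total_steer_change": sum(abs(b.steer - a.steer) for a, b in pairs),
        "total_speed_change_kmh": sum(abs(b.speed_kmh - a.speed_kmh) for a, b in pairs),
    }
```

Because every run reduces to the same dictionary keys, results from different subjects and conditions can be tabulated or plotted side by side.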

Future Goals

- Loading Town-12 and adding more scenarios to it for large-scale testing, with the help of better hardware support.

- Integrating the PazNet model with CARLA and training it on the data collected from the scenarios.

Resources

Acknowledgement

We would like to express our sincere gratitude to our advisor, Professor Jorge Ortiz, and our project mentor, Navid Salami Pargoo, for their invaluable guidance and support throughout the internship. We would also like to thank Noreen DeCarlo, Jennifer Shane, and Ivan Seskar for giving us this wonderful learning experience at WINLAB.

References

- [1] T. Wu, N. S. Pargoo, and J. Ortiz, “Poster abstract: Multi-sensor fusion for in-cabin vehicular sensing applications,” in Proceedings of the 22nd International Conference on Information Processing in Sensor Networks, ser. IPSN ’23, San Antonio, TX, USA: Association for Computing Machinery, 2023, pp. 332–333, ISBN: 9798400701184. DOI: 10.1145/3583120.3589836. [Online]. Available: https://doi.org/10.1145/3583120.3589836.

- [2] T. Wu, N. Martelaro, S. Stent, J. Ortiz, and W. Ju, “Learning when agents can talk to drivers using the INAGT dataset and multisensor fusion,” Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., vol. 5, no. 3, Sep. 2021. DOI: 10.1145/3478125. [Online]. Available: https://doi.org/10.1145/3478125.

- [3] A. Dosovitskiy, G. Ros, F. Codevilla, A. Lopez, and V. Koltun, “CARLA: An open urban driving simulator,” in Proceedings of the 1st Annual Conference on Robot Learning, 2017, pp. 1–16.
